Midjourney Is The Next Netflix. Here's Why

Midjourney is on a path to become the next Netflix (and give YouTube a run for its money). Understanding why helps to shake free of some myths about generative AI.

Midjourney, with a self-funded team of eleven, is poised to be the next Netflix. They have all of the building blocks: traction, data, users and a clear brand.

The platform is best known for generating images from a text prompt. So how do you get from there to competing with Netflix?

First, you need to understand that there’s a blind spot in how we think about generative AI: it isn’t just a tool for creating old forms of media, it’s a new form of media itself.

Serendipity and Surprise

Spend some time using Midjourney, and you might first feel a little stuck with what to ask for, and then a bit frustrated with images that don’t quite match what you had in mind, and then you’ll probably ease into a sort of addictive fascination.

Start out requesting “dogs that can fly”:

And soon you’ll be imagining yourself a ‘prompt engineer’ by requesting:

a golden retriever catching a frisbee in mid-air, Central Park, New York City, action photography, texture, film grain, intricate hasselblad dslr RAW, sunset

As Midjourney processes your prompt, the image comes into fuzzy view (this is the AI going through ‘steps’ from pure noise, through to an image):

Until you have two rows of four options (where you can choose to upscale the image or create variations):

Eventually arriving at this:

Perfect? No…seems like Midjourney has some trouble with dogs in action maybe? And so…you refine the prompt, try again, create more variations.

This interaction and attempt at refinement is the point. It IS the experience, as if you could replay your favourite movie a dozen different times.

Stories, Styles and The New Media

I mean, imagine Harry Potter if it was directed by Wes Anderson:

Or Pixar:

Are these just fun photos? A sort of AI-generated fan art?

Sure, but we’re starting to circle around something far more profound. Because it’s easy enough to focus on the final photo, just as it was easy enough to focus on the Queen or the Pope.

But that just highlights the challenge with shifts in media paradigms: we see things that are familiar. In this case, we focus on the ‘finished product’ (the photo, video, or piece of music) while missing that the creation process itself is where the ‘new media action’ sits.

Imagine:

You start to watch a Harry Potter movie and then say “Hey, Siri, switch this to a Wes Anderson style”

As you watch, a ‘bubble’ appears: it’s an alternate ‘prompt’ that another viewer created and that has gained a million ‘likes’. You activate the bubble, and Harry Potter is now a female lead.

Continuing, you get to the scene where they play Quidditch. A ‘remix’ icon appears, which lets you use voice prompts to change the construction of the field or the rules of the game.

Is the media the movie you’re watching? Maybe…because you can actually still consume it like you always have.

Is it now an ‘interactive movie’ like Netflix’s Bandersnatch episode of Black Mirror? In a way - except that the alternate plot lines were ‘prompted’ by other users.

But peeling away the layers, the prompts that you can generate yourself turn it into something else: a type of media which is a consuming experience enabled in part by a creative ‘intelligence’ with which you can have a conversation.

AI has enabled a new type of media where your consumption of content includes a conversational layer with a creative ‘intelligence’.

Emergence and Community

Invisible in all of the above are two points that are difficult to cognitively grasp, and which (I think) give rise to an AI-Mythos:

Your prompts don’t interact with AI systems alone.

Your prompts, and the reactions you give to the results, combine with everyone else’s, along with ever-growing large language models from the people who provide the AI in the first place.

Imagine hundreds of billions of data points, being added to daily, and then reinforced by people who respond to the outputs. The prompts you give today that result in a slight distortion around a dog’s eyes will no doubt be better (and different) tomorrow.

The system is emergent, and ultimately unknowable

Those images that start to ‘fuzz’ into place when you prompt Midjourney are part of a vast probability generator. It uses all kinds of weights and training to achieve the result, but it’s ultimately a random generator.

Once generated, you can start to finesse it - replacing the dog with a cat, say, or ‘tightening’ the refinements. But in its rawest form, generative AI feels as if it has a mind of its own.

In fact, at the deepest levels of the system, even the AI engineers don’t fully understand how it works.

***

These two properties are important for parsing AI as a type of media. Because they mean that:

“Stories” will have the property of allowing for emergent content that is a tension between communities of ‘viewers’/prompters and an AI with a creative process that is similar to but adjacent to our own (think about what that term ‘adjacent’ means, because it’s loaded with meaning).

There will never be a fixed point for an AI media product. In the ‘old days’, media would proceed through gates: write a book and it’s fixed; turn the book into a ‘fixed’ movie; spin-off a ‘fixed’ comic book. Sure, there might be fan art that helps to amplify the core IP, but the ‘canon’ is a fixed entity reflected in fairly rigid media.

But now, AI media will never arrive at a point that is fully fixed. Yes, media that is produced BY AI for distribution on other platforms might be fixed, but the ‘new media’ will be fluid, dynamic, emergent (and often best experienced in immersive spaces).

Media Transitions 101

In this last point is a paradox about AI: its ability to both be a tool for ‘old media’ production, and a media itself.

In other ways, AI-as-media will look a lot like previous paradigm shifts:

At first, newer media look like niche distribution systems based on some new technology. Whether books or radio, video cassettes or streaming, attention is first paid to solving issues at the technology layer.

As the technology ‘problem’ gets solved, the attention shifts to audiences and reach. How do you get books or streaming channels, TV broadcasts or ‘interactive media’ into more living rooms? One area of focus is on the interface in the belief that it will help solve the uptake problem, whether the size of a book or the design of a television set.

There’s a struggle to make sense of the ‘grammar’ of the new media/medium. Radio is a lot like stage plays, TV is a lot like radio shows, streaming is a lot like television.

And then, a hit comes along - something that leverages the new media in a way that suits it, and the new ‘film grammar’ becomes a way to attract a larger audience. The Jane Fonda Workout (yes, look it up!), the Sopranos, or an app like Instagram in the mobile era all come to mind.

What’s confusing about AI, however, is that it’s the first media to ‘loop back’ on the media that came before.

Television wasn’t a tool used to change how radio was produced; streaming wasn’t a production suite for broadcast television.

But AI is different. It is both a tool that will be used by filmmakers, book authors, photographers and musicians (or will replace them entirely) and a new form of media itself.

And so a lot of the coverage of AI focuses on its ability to create images for social media, books that can be sold on Amazon, or 3D objects that can be used in games.

But AI is itself a media.

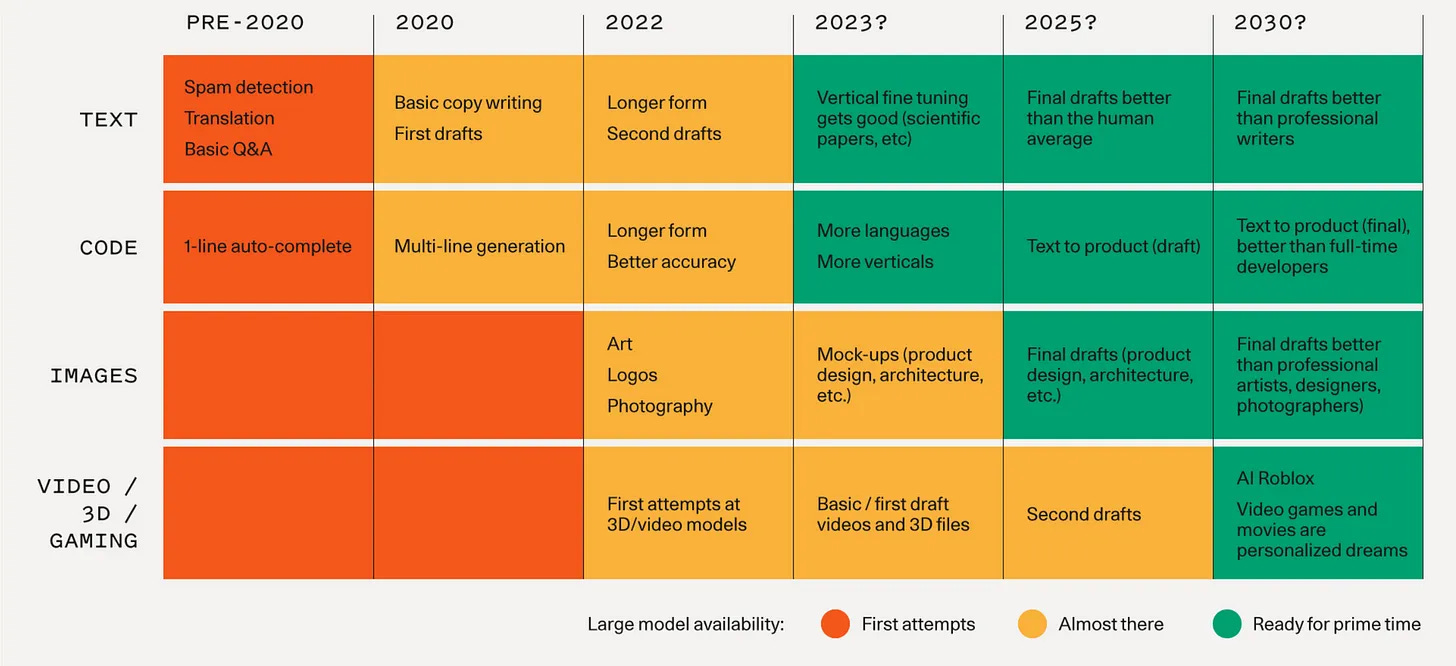

But as I outlined above, you can start to make out some broad hints of what’s to come. Others are also trying to imagine our AI-generated future:

Sequoia Capital calls the coming era one of ‘personalized dreams’

Scott Belsky, the founder of Behance and CPO at Adobe, imagines a new world of synthetic entertainment. He imagines ‘fanfare as a service’ where AI beings celebrate your creative work (so, I guess, AI-generated movies can have AI-generated fans) and predicts that “we’ll see the first original AI-made Netflix show within the next 12-18 months”.

Jon Radoff imagines immersive spaces being focused on composability: the ease of integrating, linking and combining creative content, and that partly as a result of this we’ll see emergent storytelling.

Midjourney As A New Media

Midjourney is particularly well-suited to becoming the next Netflix for the AI-as-media era.

And I think it’s for a very simple reason which is that content is always king.

Others are focused on the tooling. Stability AI, for example, provides the underpinning ‘tech’ for Midjourney (at least in part). OpenAI is all about the models, the tools, the APIs.

Yes, in the early days it might have been the producers of television sets, or radios, or VHS cassettes who made bank. But the big winners were the studios, the IP-holders.

So far, Midjourney has demonstrated a remarkable focus on improving the quality of its content and creating a flawless user experience.

This commitment is matched by the nascent ‘viewing channels’ it has created, forming up a sort of preliminary ‘broadcast’ platform with a million channels:

The site has a ‘rank pairs’ feature that feels a bit like the Netflix thumbs-up:

And so even at this early stage, you can see the outline of Midjourney not only as a tool, but a community platform, social network, and pseudo-broadcaster.

Content Advantages In Code

But it’s behind the scenes that Midjourney is building another kind of advantage. Because if interaction with AI is a new media, and if every new media needs great content (hits), then it’s important to understand how hits will be created.

This is a vast over-simplification of how Midjourney works, at least at one ‘layer’ (I’ll actually avoid how AI is trained etc):

LLMs Interpret Prompts. Prompts Are Data

Imagine you’re a television network but instead of just getting ‘ratings’ (how many people watched each show) you could also get access to every show they glanced at in the television guide. Or for a more modern example, imagine TikTok - and instead of just knowing what people view, you get deep data on their thinking and intent.

Midjourney isn’t just perfecting the ability to respond to prompts, it’s potentially gathering a powerhouse of insight into the types of content people prefer to create (and consume).

Now, this isn’t unique. The current hype around AI is largely the product of LLMs and their ability to understand the subtleties of human text.

But the platforms that have achieved scale (like Midjourney) are building increasingly large moats of audience insight (that can also be fed back into the machines).

Managing Checkpoints

Once you interpret the request, generative AI starts with a field of random noise, and through a series of steps predicts which pixels should be changed in order to arrive at a finished image that is somewhat close to your prompt. (Again…vastly simplified).
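To make the ‘noise to image’ idea a bit more concrete, here is a toy sketch in Python. It is not how Midjourney works under the hood (that isn’t public), and it cheats: the `target` list is a made-up stand-in for what a trained model would predict from your prompt. The function names and the five-‘pixel’ image are assumptions for illustration only. All it shows is the shape of the loop: start with random values, then remove a little of the error at every step.

```python
import random


def toy_denoise(target, steps=20, seed=0):
    """Start from random noise and nudge each pixel toward `target`.

    `target` is a hypothetical stand-in for a model's prediction; a real
    system learns that prediction instead of being handed the answer.
    """
    rng = random.Random(seed)
    image = [rng.uniform(0.0, 1.0) for _ in target]  # pure noise
    frames = [list(image)]
    for step in range(steps):
        remaining = steps - step
        # Remove an equal share of the remaining error, so the last
        # step lands on the target.
        image = [px + (t - px) / remaining for px, t in zip(image, target)]
        frames.append(list(image))
    return frames


def error(image, target):
    """Mean absolute difference between an image and the target."""
    return sum(abs(px - t) for px, t in zip(image, target)) / len(target)


target = [0.0, 0.25, 0.5, 0.75, 1.0]  # a five-'pixel' image
frames = toy_denoise(target)
for index in (0, 10, 20):
    print(index, round(error(frames[index], target), 4))
```

The printed error shrinks from the first frame to the last, which is the ‘fuzzy view’ sharpening into a picture.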

Checkpoints are a way to reduce the time and overhead in creating images by making ‘saves’ at particular points, and then proceeding from the most promising results.

So, let’s say you want an “oil painting of a ship”. You can train the model and find the steps that lead to the best result. Throw in some user feedback (at scale) and you can both make your system faster, and improve the quality of the output.

Midjourney is creating a massive library of checkpoints (and styles/LoRA/whatever else) that are like templates for ‘themes’. Imagine having the best template for “TV Comedy” and you get the idea.
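Here is a minimal sketch of the ‘save and proceed from the best’ idea, again in Python. In most machine learning work a checkpoint is a saved snapshot of a model’s weights taken during training, and that is what this models. Everything in it is hypothetical: the single `style` weight, the `update` rule and the `score` function (a stand-in for user feedback at scale) are invented for illustration and say nothing about Midjourney’s real pipeline.

```python
import copy


def train_with_checkpoints(weights, update, score, steps, every):
    """Run `update` for `steps` iterations, saving a snapshot every `every` steps."""
    checkpoints = []
    for step in range(1, steps + 1):
        weights = update(weights)
        if step % every == 0:
            checkpoints.append({
                "step": step,
                "weights": copy.deepcopy(weights),  # the 'save'
                "score": score(weights),
            })
    return checkpoints


def best_checkpoint(checkpoints):
    """Pick the snapshot with the highest feedback score."""
    return max(checkpoints, key=lambda c: c["score"])


# Hypothetical toy model: one weight that drifts upward as training runs.
def update(weights):
    return {"style": weights["style"] + 1}


# Hypothetical feedback: users like results best when style == 6.
def score(weights):
    return -abs(weights["style"] - 6)


checkpoints = train_with_checkpoints({"style": 0}, update, score, steps=10, every=2)
best = best_checkpoint(checkpoints)
print(best["step"], best["weights"])
```

Instead of keeping whatever the final training step produced, you go back to the snapshot people liked most and carry on from there.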

An Aesthetic Engine

In fact, I think what’s remarkable about Midjourney is that it’s actually creating a massive aesthetic engine. It’s not MAKING those choices, but by having the scale that it does, it’s able to create continuous loops between community and machine, arriving at a sort of hive mind agreement on what we truly mean and want when we request a “fantasy-style illustration” or “fashion photograph”.
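One way to picture that loop between community and machine is to turn ‘which of these two is better?’ votes into a running score per image. The sketch below uses an Elo-style update, the rating scheme from chess. That is my own swapped-in technique for illustration: I have no idea what Midjourney actually does with its pair rankings, and the image names, starting rating of 1000 and `k` value are all assumptions.

```python
def elo_update(ratings, winner, loser, k=32.0):
    """Shift two ratings after one 'this image beats that one' vote."""
    winner_rating = ratings.get(winner, 1000.0)
    loser_rating = ratings.get(loser, 1000.0)
    # Probability the winner was expected to win, given current ratings.
    expected = 1.0 / (1.0 + 10 ** ((loser_rating - winner_rating) / 400.0))
    change = k * (1.0 - expected)  # surprising wins move ratings more
    ratings[winner] = winner_rating + change
    ratings[loser] = loser_rating - change
    return ratings


# Hypothetical votes as (preferred image, rejected image).
votes = [("A", "B"), ("A", "C"), ("B", "C")]

ratings = {}
for winner, loser in votes:
    elo_update(ratings, winner, loser)

ranking = sorted(ratings, key=ratings.get, reverse=True)
print(ranking)
```

Scale that up from three votes to millions and you get something like a crowd-sourced taste score that could be fed back into the machine.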

Midjourney is a bit more like HBO, in this sense, than Netflix. HBO became known for a certain quality of content, and Midjourney has a similar positioning, although all of those aesthetics exist in code rather than in a producer’s suite somewhere.

The Path To New Media Giant

Let’s compare the Midjourney audience to the first episode in the final season of Succession:

Today, over 275,000 images are created daily (a best guess), their site gets upwards of 4 million web views per month, and there are close to 15M members on its Discord server.

Now, it’s a far cry from the roughly 100,000,000 photos shared on Instagram each day. But I prefer to think of it instead as a giant content generation focus group.

To become the ‘next Netflix’, Midjourney will need to learn the lessons from past paradigm shifts in media, and remember that while tech is important, and is the reason for the shift in the first place, you win with a seamless user experience and amazing content.

Imagine if Midjourney:

Started to organize its content into channels. In the mood for horror? Anime? Flip to the type of content you prefer.

Created a consumption layer with a ‘gentle’ prompt capacity. Very much like my Harry Potter example above, what if I could go to the Midjourney site and just click a ‘remix this’ button without needing to struggle with learning how to ‘prompt’?

Partnered with great storytellers and started creating training models on specific universes. What would happen with a formally-endorsed Midjourney version of Harry Potter, with access to the core IP behind the books, media and games?

Created monetization layers for creators, both at the AI-as-media layer (prompt engineers and model training) and the output layers.

Continued to keep a singular focus on beautiful user experiences, and brilliant content, as AI-generated video comes on stream.

The old media silos will gravitate to protecting their turf.

Sure, YouTube might adopt some sort of AI-creation tool for the generation of video, or Netflix might even air a show created entirely by AI.

But like the paradigm shifts that came before, it will be the newer kids on the block who see beyond the old buckets and interfaces, genres and storytelling grammar.

Midjourney may have the eyes fresh enough, a scale big enough, and a vision large enough to realize that it can be the next Netflix for an era when AI is the next everything.

The header image is via Midjourney (duh). The post itself is hand-written.

I changed the name of the blog. Not that anyone will notice.

I love getting email and starting a conversation, and it’s more interesting when it’s with a real person than a chat box. Feel free to comment on the Substack app, email me at doug@bureauofbrightideas.com or message me on Twitter.

Let's chat.
